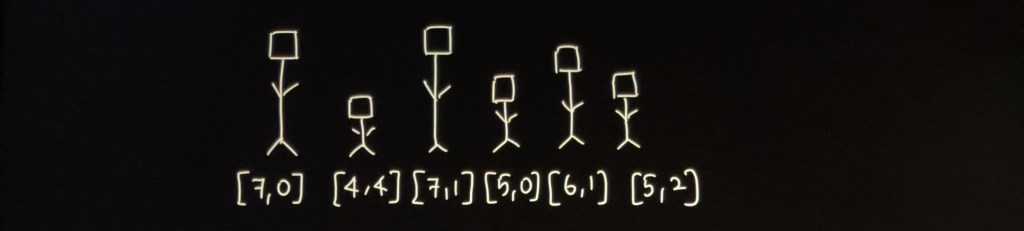

We are given the root of a BST and a target value to search for. If the target node exists, return the subtree rooted at that node, else return NULL.

Example: Value to search for = 2

4

/ \

2 7

/ \

1 3

Output:

2

/ \

1 3

Solution:

Binary Search Trees (BST) have this incredible property where an inorder traversal yields nodes in sorted order. This is because each node’s value is greater than all the values in its left subtree and lesser than all the values in its right subtree.

Armed with this knowledge, what we essentially do is to search for the given value in a sorted list. What is the most efficient way to search in a sorted list? Yes, binary search, where at each step we try to cut down our search space by half. In the case of a BST, this equals eliminating either the left or the right subtree of a node from our search space. Now this works well in a balanced BST where the number of nodes in the left and right subtrees are fairly equal. When the tree is skewed to the left or to the right, it’s the same as doing a linear search.

Our search algorithm is as follows:

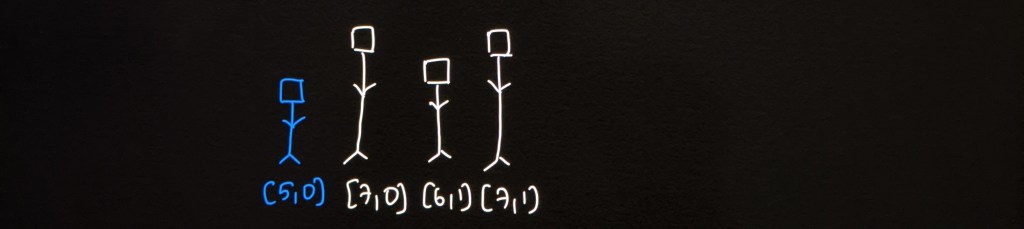

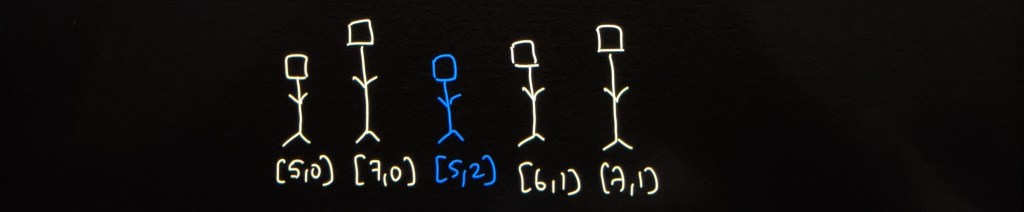

- Start at the root node. If the root = target, return root.

- If root < target, then the target might be in the right subtree. We then recursively search the right subtree only.

- If root > target, then the target might be in the left subtree. We then recursively search the left subtree only.

- If the value wasn’t found, return NULL.

Time Complexity: O(log N) in a balanced BST but O(N) in a skewed tree where N is the number of nodes in the tree.

Space Complexity: O(log N) for the use of recursive stack space. O(N) at the worst case when the tree is skewed.

Code:

def searchBST(self, root: TreeNode, val: int) -> TreeNode:

def searchHelper(root, val):

if not root:

return None

if root.val == val:

return root

if root.val < val:

return searchHelper(root.right, val)

else:

return searchHelper(root.left, val)

return searchHelper(root, val)

Hope you enjoyed this simple but delightful problem! Have a nice day!